Point releases are a grand tradition of the software industry. The practice of designating an update “2.0” is now frequently applied to entire technologies. Perhaps the most famous example of the phenomenon is the christening of “Web 2.0” by Dale Dougherty and Tim O’Reilly in 2003 to mark the arrival of “the web as platform”: Users are creators rather than simply consumers of data and services.

It didn’t take long for the “2.0” moniker to be applied to cloud computing. References to “Cloud 2.0” appeared as early as 2010 in an InformationWeek article by John Soat, who used the term to describe the shift by organizations to hybrid clouds. As important as the rise of hybrid clouds has been, combining public and private clouds doesn’t represent a giant leap in technology.

So where is that fundamental shift in cloud technology justifying the “2.0” designation most likely to reveal itself? Here’s an overview of the cloud innovations vying to become the next transformative technology.

Google’s take on Cloud 2.0: It’s all about machine-learning analytics

The company with the lowest expectation for what it labels Cloud 2.0 is Google, which believes the next great leap in cloud technology will be the transition from straightforward data storage to the provision of cloud-based analytics tools. Computerworld’s Sharon Gaudin cites Google cloud business Senior VP Diane Greene as saying CIOs are ready to move beyond simply storing data and running apps in the cloud.

Greene says data analytics tools based on machine learning will generate “incredible value” for companies by providing insights that weren’t available to them previously. The tremendous amount of data being generated by businesses is “too expensive, time consuming and unwieldy to analyze” using on-premises systems, according to Gaudin. The bigger the data store, the more effective machine learning can be in answering thorny business problems.

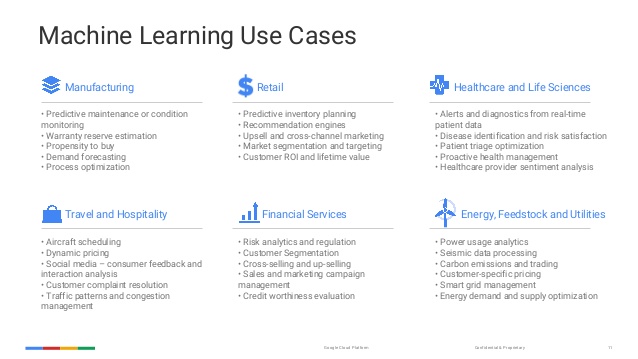

Gaudin quotes an executive for a cloud consultancy who claims Google’s analytics tools are perceived to have “much more muscle” than those offered by Amazon Web Services. The challenge for Google, according to the executive, is to show potential customers the use cases for its analytics and deep learning expertise. Even though companies have more data than they know what to do with, they will hesitate before investing in cloud-based analytics until they are confident of a solid return on that investment in the form of fast and accurate business intelligence.

Companies are expected to balk at adopting Google’s machine learning analytics until they are convinced that there are legitimate use cases for the technology. Source: Kunal Dea from Google Cloud Team, via Slideshare

The race to be the top AI platform is wide open

After decades of hype, artificial intelligence is a technology whose time has finally come. Machine learning is a key component of commercial AI implementations. A May 21, 2017, article on Seeking Alpha cites a study by Accenture that found AI could increase productivity by up to 40 percent by 2035. AI wouldn’t be possible without the cloud’s massively scalable and flexible storage and compute resources. Even the largest enterprises would be challenged to meet AI’s appetite for data, power, bandwidth, and other resources by relying solely on in-house hardware.

The Seeking Alpha article points out that Amazon’s lead in cloud computing is substantial but far from insurmountable. The market for cloud services is “still in its infancy,” and the three leading AI platforms – Amazon’s open machine learning platform, Google’s TensorFlow, and Microsoft’s Open Cognitive Toolkit and Machine Learning – are all focusing on attracting third-party developers.

AWS’s strong lead in cloud services over Microsoft, Google, and IBM is not as commanding in the burgeoning area of AI, where competition for the attention of third-party developers is fierce. Source: Seeking Alpha

Google’s TensorFlow appears to have a head start on the AI competition right out of the gate. According to The Verge, TensorFlow is the most popular software of its type on the GitHub code repository; it was developed by Google for its in-house AI work and was released to developers for free in 2015. A key advantage of TensorFlow is that the two billion Android devices in use each month can be optimized for AI. This puts machine learning and other AI features in the hands of billions of people.

Plenty of ‘Superclouds’ to choose from

Competition is also heating up for ownership of the term “supercloud.” There is already a streaming music service using the moniker, as well as an orchestration platform from cloud service firm Luxoft. That’s not to mention the original astronomical use of the term that Wiktionary defines as “a very large cloud of stellar material.”

Two very different “supercloud” research projects are pertinent to next-generation cloud technology: one is part of the European Union’s Horizon 2020 program, and the other is underway at Cornell University and funded by the National Science Foundation. Both endeavors are aimed at creating a secure multicloud environment.

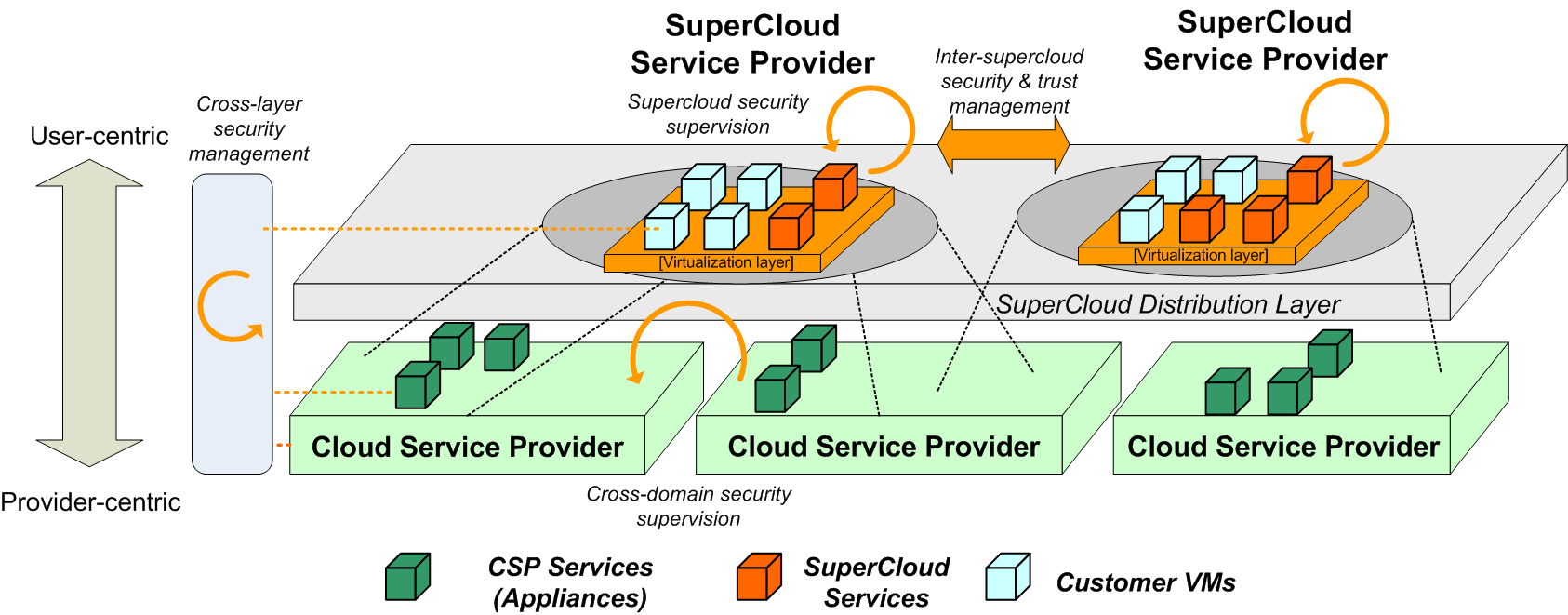

The EU’s Supercloud project is designed to allow users to craft their own security requirements and “instantiate the policies accordingly.” Compliance with security policies is enforced automatically by the framework across compute, storage, and network layers. Policy enforcement extends to “provider domains” by leveraging trust models and security mechanisms. The overarching goal of the project is the creation of secure environments that apply to all cloud platforms, and that are user-centric and self-managed.

The EU’s Supercloud program defines a “distributed architectural plane” that links user-centric and provider-centric approaches in expanded multicloud environments. Source: Supercloud Project
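To make the idea of user-defined policies enforced across the compute, storage, and network layers more concrete, here is a minimal Python sketch. It is not the Supercloud project's actual framework or policy format: the `SecurityPolicy` and `Resource` classes, the `violations` function, and the provider and region names are all hypothetical, chosen only to illustrate how a single user-written policy might be checked against resources spread over several providers.

```python
from dataclasses import dataclass

# The three layers named in the project description.
LAYERS = ("compute", "storage", "network")


@dataclass(frozen=True)
class SecurityPolicy:
    """A user-defined requirement (hypothetical shape, not the project's format)."""
    encrypt_at_rest: bool
    allowed_regions: frozenset


@dataclass(frozen=True)
class Resource:
    """One cloud resource at a given layer, with provider-reported settings."""
    name: str
    layer: str
    provider: str
    region: str
    encrypted: bool


def violations(policy, resources):
    """Return (resource name, reason) pairs for every resource that breaks the policy."""
    found = []
    for r in resources:
        if r.layer not in LAYERS:
            found.append((r.name, "unknown layer"))
            continue
        # The region rule applies to every layer and every provider.
        if r.region not in policy.allowed_regions:
            found.append((r.name, "region not allowed"))
        # The encryption rule applies only to the storage layer.
        if policy.encrypt_at_rest and r.layer == "storage" and not r.encrypted:
            found.append((r.name, "storage not encrypted"))
    return found


policy = SecurityPolicy(
    encrypt_at_rest=True,
    allowed_regions=frozenset({"eu-west", "eu-central"}),
)
resources = [
    Resource("vm-1", "compute", "provider-a", "eu-west", encrypted=False),
    Resource("bucket-1", "storage", "provider-b", "eu-central", encrypted=False),
    Resource("vpn-1", "network", "provider-a", "us-east", encrypted=True),
]

report = violations(policy, resources)
for name, reason in report:
    print(f"{name}: {reason}")
```

In this toy version the user writes the policy once and the same check runs against every provider's resources. A real framework would presumably go further and block or correct non-compliant resources rather than only report them.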

A different approach to security is at the heart of the Supercloud research being conducted at Cornell and led by Hakim Weatherspoon and Robbert van Renesse. By using “nested virtualization,” the project packages virtual machines with the network and compute resources they rely on to facilitate migration of VMs between “multiple underlying and heterogeneous cloud infrastructures.” The researchers intend to create a Supercloud manager as well as network and compute abstractions that will allow apps to run across multiple clouds.
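The packaging idea can be pictured with a small data model. The Python sketch below is only an illustration under stated assumptions: it does not implement nested virtualization, and the class names (`PackagedVM`, `NetworkAbstraction`, `ComputeAbstraction`), the `migrate` function, and the cloud names are hypothetical rather than taken from the Cornell project. It shows just one property: when a VM travels together with its own network and compute abstractions, moving it to another cloud changes where it is hosted but not what the application sees.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class NetworkAbstraction:
    virtual_ip: str  # address the app sees; stays fixed across moves


@dataclass(frozen=True)
class ComputeAbstraction:
    vcpus: int
    memory_gb: int


@dataclass(frozen=True)
class PackagedVM:
    """A VM bundled with the network and compute abstractions it relies on."""
    name: str
    network: NetworkAbstraction
    compute: ComputeAbstraction
    host_cloud: str  # the underlying provider currently hosting the bundle


def migrate(vm, target_cloud):
    """Return a copy of the bundle on another cloud; only the host changes."""
    return replace(vm, host_cloud=target_cloud)


vm = PackagedVM(
    name="app-vm",
    network=NetworkAbstraction(virtual_ip="10.0.0.5"),
    compute=ComputeAbstraction(vcpus=2, memory_gb=8),
    host_cloud="cloud-a",
)
moved = migrate(vm, "cloud-b")
print(moved.host_cloud, moved.network.virtual_ip)
```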

The precise form of the next iteration of cloud computing is anybody’s guess. However, it’s clear that AI and multicloud are technologies poised to take cloud services by storm in the not-too-distant future.