Data centers are evolving from walled-in, self-contained hardware and software systems into cloud gateways. The platforms companies relied on just a decade ago are now destined for computer museums. As the cloud transition continues, the roles of in-house IT will be very different, but just as vital to the organization’s success as ever.

Oracle/mainframe-type legacy systems have short-term value in the “pragmatic” hybrid clouds described by David Linthicum in a September 27, 2017, post on the Doppler. In the long run, however, the trend favoring OpEx (cloud services) over CapEx (data centers) is unstoppable. The cloud offers functionality and economy that no data center can match.

Still, there will always be some role for centralized, in-house processing of the company’s important data assets. IT managers are left to ponder what tomorrow’s data centers will look like, and how the in-house installations will leverage the cloud to deliver the solutions their customers require.

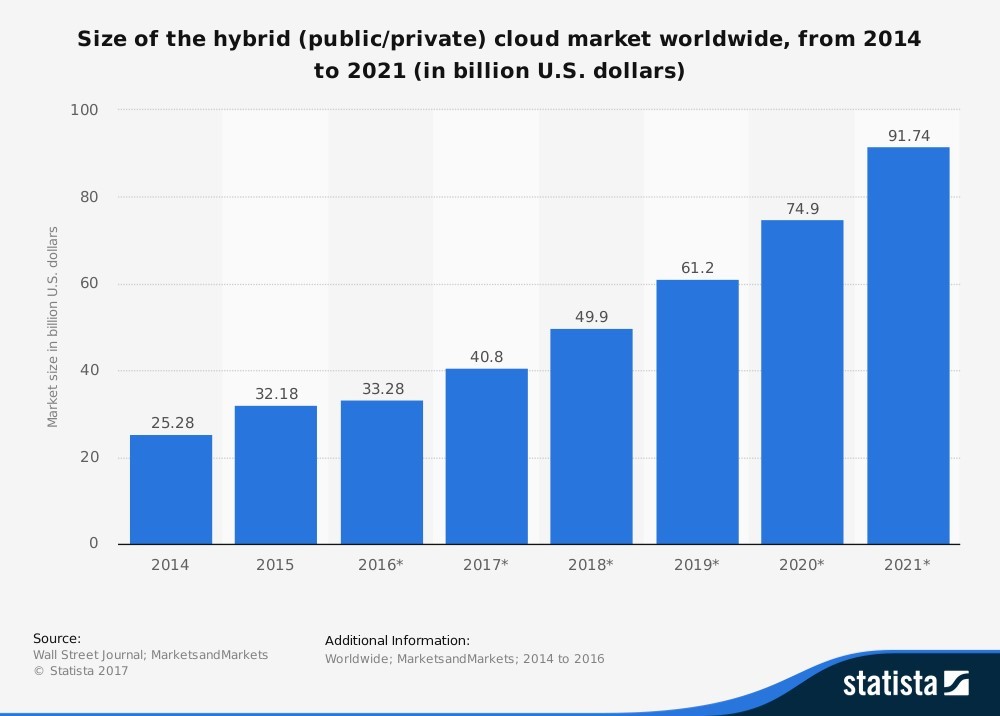

Spending on hybrid clouds in the U.S. is projected to nearly quadruple between 2014 and 2021. Source: Statista, via Insight

Two disparate networks, one unified workflow

The mantra of any organization planning a hybrid cloud setup is “Do the work once.” Trend Micro’s Mark Nunnikhoven writes in an April 19, 2017, article that this ideal is nearly impossible to achieve due to the very different natures of in-house and cloud architectures. In reconciling the manual processes and siloed data of on-premises networks with the seamless, automated workflows of cloud services, some duplication of effort is unavoidable.

The key is to minimize your reliance on parallel operations: one process run in-house, another run for the same purpose in the cloud. For example, a web server in the data center should run identically to its counterparts deployed and running in the cloud. The first step in implementing a unified workflow is choosing tools that are “born in the cloud,” according to Nunnikhoven. The most important of these relate to orchestration, monitoring/analytics, security, and continuous integration/continuous delivery (CI/CD).

Along with a single set of tools, managing a hybrid network requires visibility into workloads, paired with automated delivery of solutions to users. In both workload visibility and process automation, cloud services are ahead of their data center counterparts. As with the choice of cloud-based monitoring tools, using cloud services to manage workloads both in the cloud and on-premises gives you greater insight and improved efficiency.

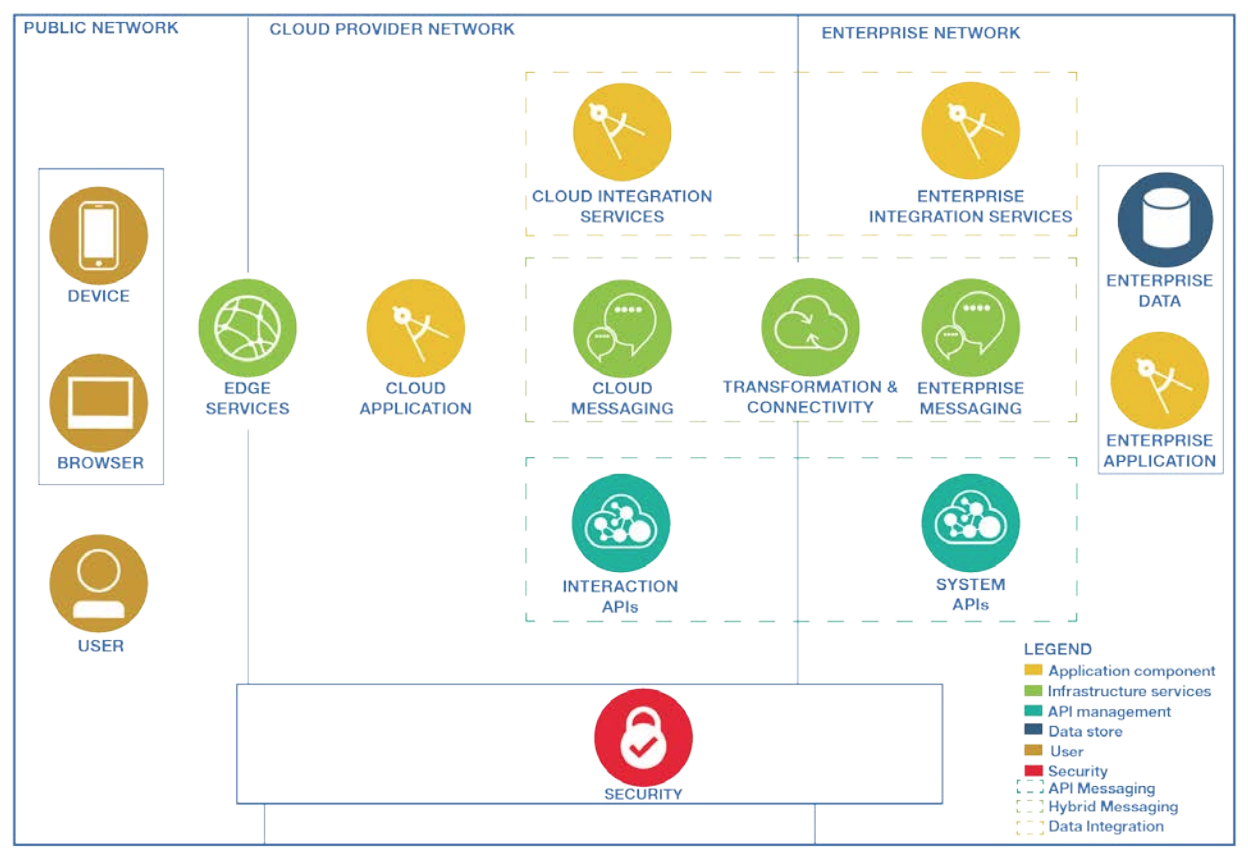

The components of a Cloud Customer Hybrid Integration Architecture encompass the public network, a cloud provider network, and an enterprise network. Source: Cloud Standards Customer Council

Process automation is facilitated by orchestration tools that let you automate the setup of the operating system, the application, and security. Wrapping these components in a single package makes one-click deployment possible, along with other time- and resource-saving techniques. Convincing staff of the benefits of applying cloud tools and techniques to the management of in-house systems is often the most difficult obstacle in the transition from traditional data centers to cloud services.
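As a rough illustration of the single-package idea, the sketch below bundles operating system, application, and security settings into one description and derives the same deployment steps for an in-house target and a cloud target. It is a minimal sketch under stated assumptions, not a real orchestration tool: `DeploymentPackage`, `plan_deployment`, and all the values shown are hypothetical names invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DeploymentPackage:
    """One bundle describing OS, application, and security settings together."""
    os_image: str
    app_version: str
    open_ports: tuple = (443,)


def plan_deployment(package: DeploymentPackage, target: str) -> list:
    """Return the ordered steps for a target; only the target name differs."""
    return [
        f"{target}: provision {package.os_image}",
        f"{target}: install app {package.app_version}",
        f"{target}: allow inbound ports {list(package.open_ports)}",
    ]


# Hypothetical values, for illustration only.
web_server = DeploymentPackage(os_image="linux-base", app_version="1.4.2")

for target in ("on-premises", "cloud"):
    for step in plan_deployment(web_server, target):
        print(step)
```

Because both targets are planned from the same package, the web server is described once and the steps differ only in where they run, which is the point of avoiding parallel operations.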

The view of hybrid clouds from the data center out

Role reversals are not uncommon in the tech industry. However, it took decades to switch from centralized mainframes to decentralized PC networks and back to centralized (and virtualized) cloud servers. Today’s changes happen at a speed that even the most agile IT operations find difficult to match. Kim Stevenson, VP and General Manager of data center infrastructure at Lenovo, believes the IT department’s role is now more important than ever. As Stevenson explains in an October 5, 2017, article on the Stack, the days of simply keeping pace with tech changes are over. Today, IT must drive change in the company.

The only way to deliver the data-driven tools and resources business managers need is by partnering with the people on the front lines of the business, working with them directly to make deploying and managing apps smooth, simple, and quick. As the focus of IT shifts from inside-out to outside-in, the company’s success depends increasingly on business models that support “engineering future-defined products” using software-defined facilities that deliver business solutions nearly on demand.

Data center evolution becomes a hybrid-cloud revolution

Standards published by the American National Standards Institute (ANSI) and the Telecommunications Industry Association (TIA) define four data center tiers, ranging from a basic modified server room with a single, non-redundant distribution path (tier one) to a facility with redundant capacity components and multiple independent distribution paths (tier four). A tier one data center offers limited protection against physical events, while a tier four implementation protects against nearly all physical events and supports concurrent maintainability, so a single fault will not cause downtime.

These standards addressed the needs of traditional client-server architectures characterized by north-south traffic, but they come up short when applied to server virtualization’s primarily east-west data traffic. Network World’s Zeus Kerravala writes in a September 25, 2017, article that the changes this fundamental shift will bring to data centers have only begun.

Hybrid cloud dominates the future plans of enterprise IT departments: 85 percent of those plans involve multiple clouds, the vast majority of which will be hybrid clouds. Source: RightScale 2017 State of the Cloud Report

Kerravala cites studies conducted by ZK Research that found 80 percent of companies will rely on hybrid public-private clouds for their data-processing needs. Software-defined networks (SDNs) are the key to achieving the agility future data-management tasks will require. SDNs will create hyper-converged infrastructures (HCIs) to provide the servers, storage, and networks modern applications rely on.

Two technologies that make HCIs possible are containerization and micro-segmentation. The former virtualizes an entire runtime environment in a container that can be created and destroyed almost instantly. The latter supports predominantly east-west data traffic by creating secure zones within which data can move without passing through firewalls, intrusion prevention tools, and other security components.
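One common way to express secure zones is a default-deny allow-list of flows between segments. The sketch below shows that idea only; it is an assumption about how such a policy might be modeled, not a description of any particular SDN product, and the workload names, segment names, and ports are all hypothetical.

```python
# Each workload belongs to a named segment (all names are hypothetical).
SEGMENT_OF = {
    "web-1": "web",
    "app-1": "app",
    "db-1": "db",
}

# Allow-list of east-west flows: (source segment, destination segment, port).
ALLOWED_FLOWS = {
    ("web", "app", 8080),
    ("app", "db", 5432),
}


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Permit a flow only if its segments and port are explicitly allow-listed."""
    flow = (SEGMENT_OF.get(src), SEGMENT_OF.get(dst), port)
    return flow in ALLOWED_FLOWS


print(is_allowed("web-1", "app-1", 8080))  # True: web may reach app
print(is_allowed("web-1", "db-1", 5432))   # False: web may not reach db directly
```

Here the policy travels with the workload’s segment rather than with a perimeter device, so traffic between servers is checked where it originates instead of being routed through a central firewall.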

Laying the groundwork for the cloud-first data center

IT executives are eternal optimists. The latest proof is evident in the results of a recent Intel survey of data security managers. Eighty percent of the executives report their organizations have adopted a cloud-first strategy that they expect to take no more than one year to implement. What’s striking is that the same survey conducted one year earlier reported the same figures. Obviously, cloud-first initiatives are taking longer to put in place than expected.

Sometimes, an unyielding “cloud first” policy results in square pegs being forced into round holes. TechTarget’s Alan R. Earls writes in a May 2017 article that the people charged with implementing their companies’ cloud-first plans often can’t explain what benefits they expect to realize as a result. Public clouds aren’t the answer for every application. The organization’s experience level with cloud services and the availability of cloud resources both matter, and some apps simply work better or run more reliably and efficiently in-house.

An application portfolio assessment identifies the systems that belong in the cloud, particularly those that take the best advantage of containers and microservices. For most companies, the data center of the future will operate as the private end of an all-encompassing hybrid cloud.