Everybody’s talking — to cloud-connected devices. The question is whether your company has the speech APIs it will need to collect, analyze, and act on valuable speech data.

Many people see natural language processing as the leading edge of artificial intelligence’s push into everyday business activities. Still, the AI-powered voice technologies at the heart of popular virtual assistants such as Apple’s Siri, Microsoft’s Cortana, Amazon’s Alexa, and Samsung’s Bixby are considered “less than superhuman,” as ITworld’s Peter Sayer writes in a May 24, 2017, article.

Many companies find the weaknesses of the speech AI in those consumer products easier to accept because the systems can run on low-power, plain-vanilla hardware; no supercomputers are required.

Coming soon to a cloud near you: Automatic speech analysis

Machine voice analysis took a big step forward with the recent general availability of Google’s Cloud Speech API, which was first released as an open beta in the summer of 2016. The Cloud Speech API uses the same neural-network technology found in the Google Home and Google Assistant voice products. As InfoQ’s Kent Weare writes in a May 6, 2017, article, the API supports 80 different languages.
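For developers, the core of the service is a single REST call. The following is a minimal Python sketch using only the standard library. It assumes the v1 `speech:recognize` endpoint, API-key authentication, and 16 kHz LINEAR16 (raw 16-bit PCM) audio; check the current Cloud Speech documentation before relying on any of these details. It builds the request body, sends it, and pulls the top transcript out of each result.

```python
import base64
import json
import urllib.request

# Assumed v1 REST endpoint for synchronous (short-audio) recognition.
RECOGNIZE_URL = "https://speech.googleapis.com/v1/speech:recognize"


def build_recognize_request(audio_bytes, language_code="en-US", sample_rate_hz=16000):
    """Build the JSON body for a synchronous recognize call.

    Assumes 16-bit linear PCM (LINEAR16) audio; adjust `encoding` and
    `sampleRateHertz` to match the actual recording.
    """
    return {
        "config": {
            "encoding": "LINEAR16",
            "sampleRateHertz": sample_rate_hz,
            "languageCode": language_code,
        },
        # Inline audio must be base64-encoded; audio already stored in Cloud
        # Storage can be referenced with {"uri": "gs://..."} instead.
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }


def extract_transcripts(response):
    """Return the top-ranked transcript for each result in a recognize response."""
    return [
        result["alternatives"][0]["transcript"]
        for result in response.get("results", [])
        if result.get("alternatives")
    ]


def recognize(audio_bytes, api_key, language_code="en-US"):
    """Send audio to the API (needs network access and a valid API key)."""
    body = json.dumps(build_recognize_request(audio_bytes, language_code)).encode("utf-8")
    req = urllib.request.Request(
        f"{RECOGNIZE_URL}?key={api_key}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return extract_transcripts(json.load(resp))
```

Synchronous recognition is meant for short clips; longer recordings, such as full customer calls, would go through the API’s asynchronous long-running variant instead.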

Google product manager Dan Aharon cites three typical human-computer use cases for the Cloud Speech API: mobile, web, and IoT.

Human-computer interactions that speech APIs facilitate include search, commands, messaging, and dictation. Source: Google, via InfoQ

Among the advantages of cloud speech interfaces, according to Aharon, are speed (150 words per minute, compared to 20-40 wpm for typing); interface simplicity; hands-free input; and the increasing popularity of always-listening devices such as Amazon Echo, Google Home, and Google Pixel.

Customer-service phone calls are a prime example of a voice-based resource that businesses often let go to waste. Interactive Tel CTO Gary Graves says the Cloud Speech API lets the company gather “actionable intelligence” from recordings of customer calls to the service department. Besides giving managers tools for improving customer service, the intelligence collected holds employees accountable, and car dealerships have found that this heightened accountability translates directly into increased sales.

Humanizing and streamlining automated voice interactions

Having to navigate the multi-level menu trees of voice-response systems can make customers pine for the days of long hold times. Twilio recently announced a beta of its Automated Speech Recognition (ASR) API, which converts speech to text so that developers can build applications that respond to callers’ natural statements. Compare “Tell us why you’re calling” with “If you want to speak to a support person, say ‘support’.” VentureBeat’s Blair Hanley Frank reports on the beta release in a May 24, 2017, article.
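The difference shows up in how a call flow is written. Twilio describes call flows in TwiML, its XML dialect, and its `<Gather>` verb accepts `input="speech"`, after which Twilio posts the caller’s transcribed answer to the `action` URL as a `SpeechResult` parameter. The Python sketch below generates that TwiML with the standard library. The `/handle-reason` path and the `route_from_speech` helper are hypothetical, and the keyword matching is a deliberately naive stand-in for real intent detection, not part of Twilio’s API.

```python
import xml.etree.ElementTree as ET


def speech_menu_twiml(prompt, action_url):
    """Return TwiML that asks an open question and collects a spoken answer."""
    response = ET.Element("Response")
    # input="speech" asks Twilio to transcribe the reply; the transcription
    # is then POSTed to action_url (as SpeechResult).
    gather = ET.SubElement(
        response, "Gather", input="speech", action=action_url, method="POST"
    )
    say = ET.SubElement(gather, "Say")
    say.text = prompt
    return ET.tostring(response, encoding="unicode")


def route_from_speech(speech_result):
    """Hypothetical routing rule: pick a queue from keywords in the transcript."""
    text = speech_result.lower()
    if "bill" in text or "charge" in text:
        return "billing"
    if "broken" in text or "not working" in text:
        return "support"
    return "general"


if __name__ == "__main__":
    print(speech_menu_twiml("Tell us why you're calling.", "/handle-reason"))
    print(route_from_speech("I was charged twice this month"))
```

The open prompt replaces a menu tree; the hard part moves to interpreting free-form answers, which is the gap intent-detection services aim to fill.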

Twilio’s ASR is based on the Google Cloud Speech API; it handles 89 different languages and dialects, and pricing starts at two cents per 15 seconds of recognition. Twilio also announced its Understand API, which tells applications the intent behind the natural language the API processes. Understand works with Amazon Alexa as well as Twilio’s own Voice and SMS tools.
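To see what “two cents per 15 seconds” means for a call center, here is a small Python estimator. It assumes, hypothetically, that the two-cent starting rate applies and that each started 15-second block is billed in full; Twilio’s actual rounding rules and volume tiers may differ. Costs are kept in integer cents to avoid floating-point rounding.

```python
import math

PRICE_CENTS_PER_BLOCK = 2  # assumed: the "from two cents" starting rate
BLOCK_SECONDS = 15         # billing block length


def recognition_cost_cents(seconds_of_audio):
    """Estimate ASR cost, assuming each started 15-second block is billed in full."""
    if seconds_of_audio <= 0:
        return 0
    blocks = math.ceil(seconds_of_audio / BLOCK_SECONDS)
    return blocks * PRICE_CENTS_PER_BLOCK


if __name__ == "__main__":
    # 1,000 calls, each with 20 seconds of recognized speech.
    total = sum(recognition_cost_cents(20) for _ in range(1000))
    print(f"${total / 100:.2f}")
```

Under these assumptions, each 20-second answer spans two blocks, so 1,000 such calls cost 4,000 cents ($40). Keeping prompts that invite short answers is what keeps the block count down.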

Could Amazon’s cloud strength be a weakness in voice services?

By comparison, Amazon Connect, the cloud-based contact center Amazon announced in March 2017, aims to capitalize on its built-in integration with Amazon DynamoDB, Amazon Redshift, Amazon Aurora, and other existing AWS services. TechCrunch’s Ingrid Lunden writes in a March 28, 2017, article that Amazon hopes to win customers with lower costs than call centers that are not cloud-based, and by requiring no up-front costs or long-term contracts.

A key feature of Amazon Connect is the ability to create automatic responses that integrate with Amazon Alexa and other systems based on the Amazon Lex AI service. Rather than being an advantage, the tight integration of Amazon Connect with Lex and other AWS offerings could prove to be problematic for potential customers, according to Zeus Kerravala in an April 25, 2017, post on No Jitter.

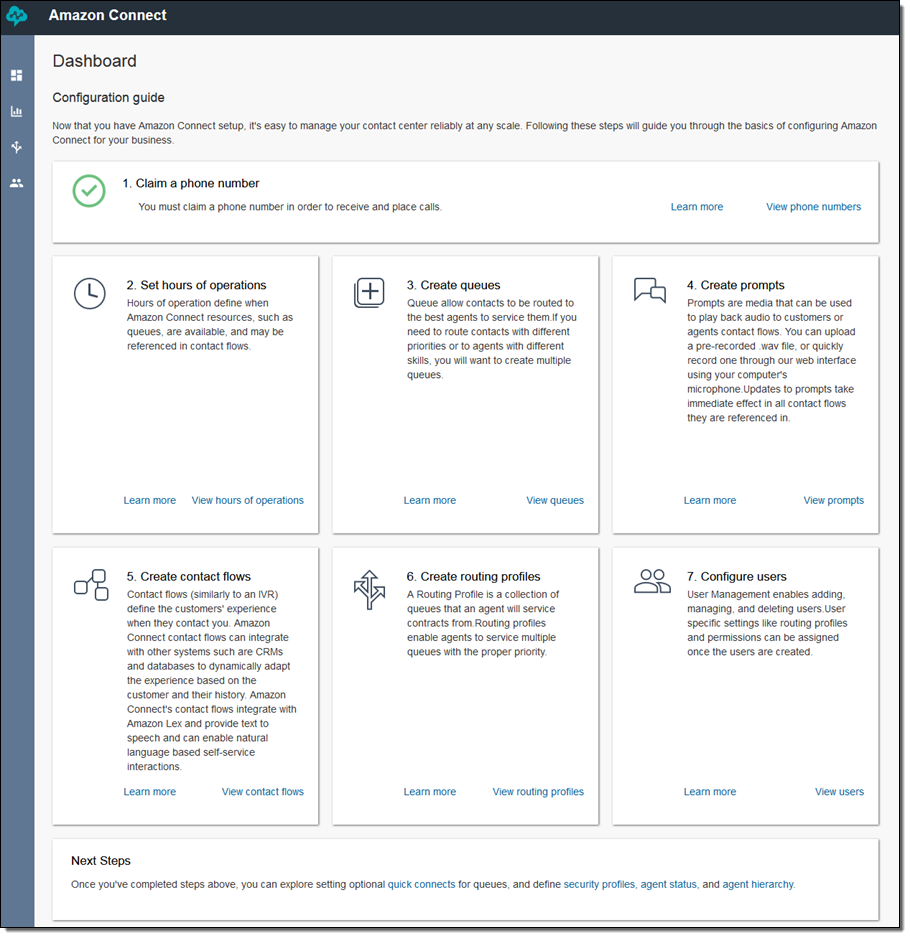

Kerravala points out that the “self-service” approach Amazon takes to the deployment of a company’s custom voice-response system presents a challenge for developers. Businesses wishing to implement Amazon Connect must first complete a seven-step AWS sign-up process that includes specifying the S3 bucket to be used for storage.

The Amazon Connect contact center requires multiple steps to configure for a specific business once you’ve completed the initial AWS setup process. Source: Amazon Web Services

Developers aren’t the traditional customers for call-center systems, according to Kerravala, and many potential Amazon Connect customers will struggle to find the in-house talent required to put the many AWS pieces together to create an AI-based voice-response system.

The democratization of AI, Microsoft style

In just two months, Microsoft’s open-source deep learning system, the Cognitive Toolkit, went from release candidate to version 2.0, as eWeek’s Pedro Hernandez reports in a June 2, 2017, article. The general-availability release of the toolkit, formerly called the Computational Network Toolkit (CNTK), supports the Keras neural network library, whose “user-centric” API is intended for rapid prototyping. The goal is to let people with little or no AI experience add machine learning to their apps.

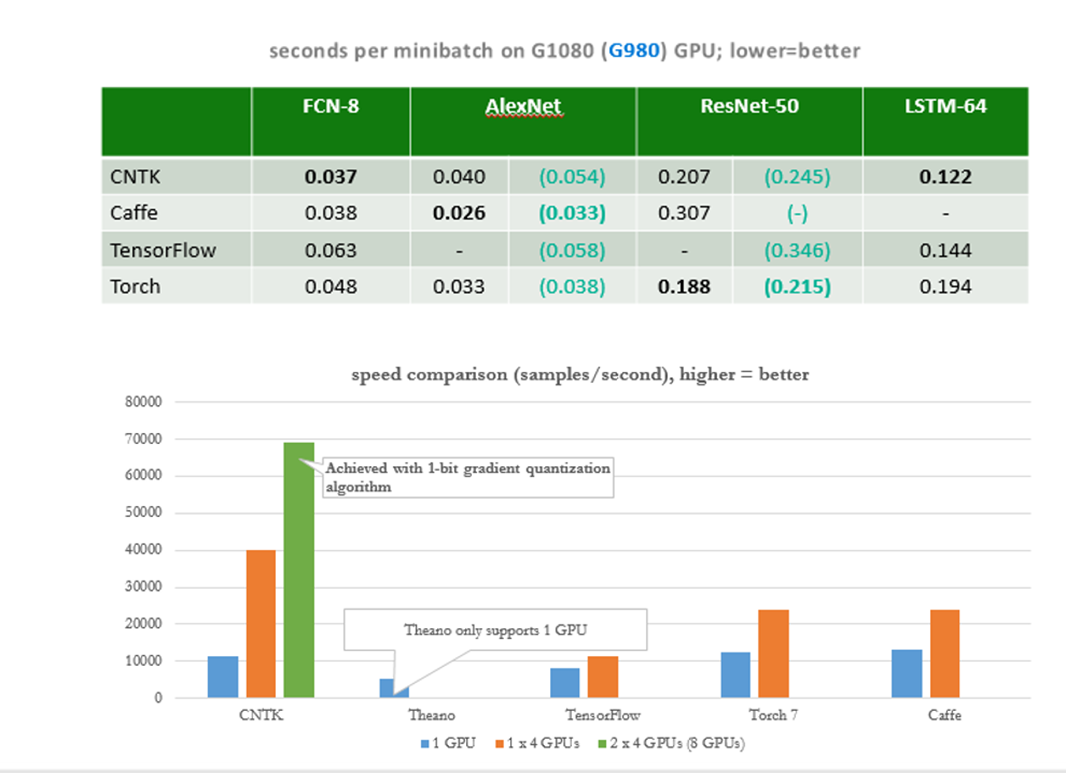

Microsoft claims its Cognitive Toolkit delivers clear speed advantages over Caffe, Torch, and Google’s TensorFlow via efficient scaling in multi-GPU/multi-server settings. Source: Microsoft Developer Network

According to Microsoft, three trends are converging to deliver AI capabilities to everyday business apps:

TechRepublic’s Mark Kaelin writes in a May 22, 2017, article that Microsoft’s Cognitive Services combine with the company’s Azure cloud platform so that developers can add facial recognition to an existing access-control app with just a few lines of code. Other “ready-made” AI services available by pairing Azure with Cognitive Services include the Video API, Translator Speech API, Translator Text API, Recommendations API, and Bing Image Search API, and the company promises more such integrated services and APIs in the future.
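What “a few lines of code” might look like: the Python sketch below detects a face in a camera snapshot and asks the Face API’s verify operation whether it matches an enrolled employee’s face. It assumes the Face API v1.0 REST endpoints (`/detect` and `/verify`) in the West US region, subscription-key authentication, and a publicly reachable image URL. The `should_unlock` policy and its 0.7 confidence floor are hypothetical choices, not Microsoft recommendations. Because detected face IDs are temporary, a real access-control app would more likely enroll staff in a person group and use the Identify operation.

```python
import json
import urllib.request

# Assumed region and API version; substitute your own Cognitive Services endpoint.
FACE_ENDPOINT = "https://westus.api.cognitive.microsoft.com/face/v1.0"


def _post(path, payload, subscription_key):
    """POST a JSON payload to the Face API and return the decoded JSON reply."""
    req = urllib.request.Request(
        FACE_ENDPOINT + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": subscription_key,
        },
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def detect_face_id(image_url, subscription_key):
    """Return the faceId of the first detected face, or None if there is none."""
    faces = _post("/detect?returnFaceId=true", {"url": image_url}, subscription_key)
    return faces[0]["faceId"] if faces else None


def should_unlock(verify_result, min_confidence=0.7):
    """Hypothetical door policy: require a match and a minimum confidence."""
    return bool(verify_result.get("isIdentical")) and (
        verify_result.get("confidence", 0.0) >= min_confidence
    )


def check_visitor(camera_image_url, enrolled_face_id, subscription_key):
    """Detect the visitor's face, verify it against the enrolled face, decide."""
    visitor_id = detect_face_id(camera_image_url, subscription_key)
    if visitor_id is None:
        return False
    result = _post(
        "/verify",
        {"faceId1": visitor_id, "faceId2": enrolled_face_id},
        subscription_key,
    )
    return should_unlock(result)
```

Keeping the unlock decision in a separate, pure function makes the security policy easy to review and test without calling the service.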

While it is certain that AI-based applications will change the way people interact with businesses, it is anybody’s guess whether the changes will improve those interactions, or make them even more annoying than many automated systems are today. If history is any indication, it will likely be a little of both.