“I didn’t realize we were wasting so much time and money.”

That is the most likely reaction to your next cloud audit, particularly if it has been more than a few months since your last cloud-spending review. If your management checks aren’t happening at cloud speed, it’s likely you can squeeze more cycles out of whatever amount you’ve budgeted for cloud services.

Keep these six tips in mind when prepping for your next cloud cost accounting to maximize your benefits and minimize waste without increasing the risks to your valuable data resources.

1. Don’t let the cloud’s simplicity become a governance trap. As Computerworld’s John Edwards writes, “It’s dead simple to provision infrastructure resources in the cloud, and just as easy to lose sight of… policy, security, and cost.”

Edwards cites cloud infrastructure consultant Chris Hansen’s advice to apply governance from the get-go by relying on small iterations that are focused on automation. Doing so allows problems related to monitoring/management, security, and finance to surface and be remedied quickly. Hansen states that an important component of cost control is being prepared: you have to make it crystal clear who in the organization is responsible for cloud security, backups, and business continuity.

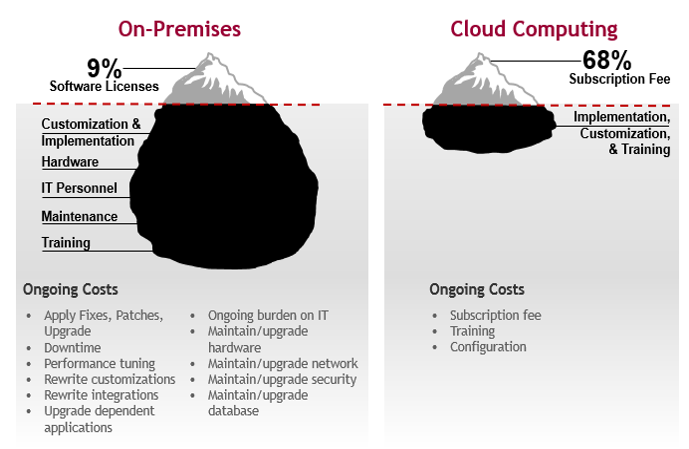

2. Update your TCO analysis for cloud-based management. A mistake that’s easy to make when switching to cloud infrastructure is applying the same total cost of ownership (TCO) metrics to cloud spending that you used when budgeting for your in-house data center. For example, a single server running 24/7 in a data center won’t affect the facility’s utility bill much, but paying for a virtual cloud server’s idle time can triple your cloud bill, according to Edwards.

Subscription fees dominate cloud total-cost-of-ownership calculations, while TCO for on-premises IT is based almost entirely on such ongoing costs as maintenance, downtime, performance tuning, and hardware/software upgrades. Source: 451 Research, via Digitalist
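To see how idle hours can inflate a cloud bill, the arithmetic below compares an instance that runs around the clock with one that runs only when it is needed. The $0.20 hourly rate and the 12-hours-a-day, 20-days-a-month usage pattern are illustrative assumptions, not figures from Edwards.

```python
# Hypothetical on-demand rate; substitute your provider's actual price.
HOURLY_RATE = 0.20      # USD per instance-hour (assumption)
HOURS_PER_MONTH = 730   # average hours in a month (8,760 / 12)


def monthly_cost(hours_running, hourly_rate=HOURLY_RATE):
    """Monthly bill for one instance billed for every hour it is running."""
    return hours_running * hourly_rate


# Always on: the instance is billed for every hour, busy or idle.
always_on = monthly_cost(HOURS_PER_MONTH)

# Actually needed only 12 hours a day on 20 business days (assumed pattern).
needed_hours = 12 * 20
scheduled = monthly_cost(needed_hours)

ratio = always_on / scheduled
print(f"Always on:  ${always_on:.2f}/month")
print(f"Scheduled:  ${scheduled:.2f}/month")
print(f"Idle time multiplies the bill by about {ratio:.1f}x")
```

Under these assumptions the always-on instance costs roughly three times as much as one that is shut down outside working hours.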

A related problem is the belief that a “lift and shift” migration is the least expensive approach to cloud adoption. In practice, moving applications unchanged often wastes so many resources that you lose the efficiency and scalability benefits that motivated the transition in the first place. The more economical approach is to invest a little time and money up front to redesign your apps to take advantage of the cloud’s cost-saving potential.

3. Monitor cloud utilization to right-size your instances. Determining the optimal size of the instances you create when you port in-house servers to the cloud doesn’t have to be a guessing game. Robert Green explains in an article on TechTarget how to use steady-state average utilization to capture server usage over a set period. Doing so lets you track the current use of server CPU, memory, disk, and network.

Size instances based on average use over 30 to 90 days, correlated with user sessions or another key metric. Any spikes in utilization can be accommodated via autoscaling, which is key to realizing cloud efficiencies. Once you’ve found the appropriate instance sizes, classify each instance as either dedicated (running 720 to 750 hours each month) or spot (not time-sensitive and activated based on demand).
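The sizing and classification steps above can be sketched in a few lines. This is a minimal illustration, not Green’s method: the instance catalog, the 25 percent headroom, and the extra “on-demand” bucket for part-time, time-sensitive work are all assumptions.

```python
from statistics import mean

# Hypothetical instance catalog: name -> (vCPUs, memory in GiB).
# Real instance families, names, and sizes vary by provider.
CATALOG = {
    "small": (2, 4),
    "medium": (4, 8),
    "large": (8, 16),
    "xlarge": (16, 32),
}


def right_size(cpu_samples, mem_samples_gib, current_vcpus, headroom=0.25):
    """Return the smallest catalog entry covering average demand plus headroom.

    cpu_samples: CPU utilization fractions (0-1) sampled over 30-90 days.
    mem_samples_gib: memory in use (GiB) sampled over the same window.
    Short spikes above the average are left to autoscaling.
    """
    need_vcpus = mean(cpu_samples) * current_vcpus * (1 + headroom)
    need_mem = mean(mem_samples_gib) * (1 + headroom)
    # Sorting by (vCPUs, memory) makes the first match the smallest that fits.
    for name, (vcpus, mem) in sorted(CATALOG.items(), key=lambda kv: kv[1]):
        if vcpus >= need_vcpus and mem >= need_mem:
            return name
    # Nothing fits: fall back to the largest size and let autoscaling add nodes.
    return max(CATALOG, key=CATALOG.get)


def classify(hours_per_month, time_sensitive):
    """Label an instance using the monthly-hours rule of thumb from the text."""
    if hours_per_month >= 720:
        return "dedicated"  # candidate for a reserved-instance commitment
    if not time_sensitive:
        return "spot"
    return "on-demand"  # assumed third bucket for part-time, time-sensitive work


print(right_size([0.10, 0.20, 0.15], [5, 6, 7], current_vcpus=16))
print(classify(730, time_sensitive=True))
```

A 16-vCPU server averaging 15 percent CPU and 6 GiB of memory needs only about 3 vCPUs and 7.5 GiB once headroom is added, so it lands on the hypothetical “medium” size.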

The former, also called reserved instances, may qualify for steep discounts from the cloud provider if you can commit to running them for at least one year. The latter, which are appropriate only for specific use cases, can be purchased by bidding on unused AWS instances, for example. If your bid is highest, your workload will run until the spot price exceeds your bid.
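Both purchasing options in the paragraph above reduce to simple arithmetic. The sketch below models the bid-based spot scheme exactly as described (the workload runs until the spot price exceeds your bid) and computes how many on-demand hours a one-year reserved commitment must displace to pay for itself. All prices are hypothetical, the function names are illustrative, and providers revise their spot and reservation terms, so check current documentation before relying on either model.

```python
HOURS_PER_YEAR = 8_760


def hours_until_interrupted(spot_prices, bid):
    """Count consecutive hours a spot workload runs under a bid-based model.

    spot_prices: hourly spot prices (USD) in time order (hypothetical data).
    The workload stops the first hour the spot price exceeds the bid.
    """
    hours = 0
    for price in spot_prices:
        if price > bid:
            break
        hours += 1
    return hours


def reserved_break_even_hours(on_demand_rate, reserved_rate,
                              hours_per_year=HOURS_PER_YEAR):
    """On-demand hours per year at which a one-year reservation breaks even.

    Assumes the reservation is billed at reserved_rate for every hour of the
    term, whether or not the instance is used.
    """
    return reserved_rate * hours_per_year / on_demand_rate


prices = [0.031, 0.029, 0.035, 0.042, 0.030]
print(hours_until_interrupted(prices, bid=0.040))  # 3
print(round(reserved_break_even_hours(0.10, 0.06)))
```

With an assumed $0.10 on-demand rate and a $0.06 reserved rate, the reservation pays off only if the instance would otherwise run about 5,256 hours a year (60 percent of the time), which is why the text reserves commitments for instances running nearly every hour of the month.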

You can take all the guesswork out of instance sizing by using the Morpheus unified orchestration platform, which automates instance optimization via real-time cloud brokerage. The service’s clear, comprehensive management console lets you set custom tiers and pricing for the instances you provision. All costs from public cloud providers are visible instantly, allowing your users to balance cost, capacity, and performance with ease.

4. Be realistic about cloud infrastructure cost savings. Making the business case for migrating to the cloud requires collecting and analyzing a great deal of information about your existing IT setup. In addition to auditing servers, components, and applications, you must also closely monitor your peak and average demand for CPU and memory resources.

Because in-house systems are designed to accommodate peak loads, data-center utilization can be as low as 5 to 15 percent at some organizations, according to AWS senior consultant Mario Thomas, who is quoted by ZDNet’s Steve Ranger. Even companies operating at the industry average of 45 percent utilization see the switch to cloud services as an opportunity to reduce their infrastructure costs.
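One way to see why low utilization matters is to normalize cost per unit of capacity actually used: if you pay for capacity sized to peak but use only a fraction of it, every unit you use effectively costs the nominal price divided by that fraction. The sketch below applies this to the utilization figures quoted above; the normalized $1 unit cost and the function name are illustrative assumptions.

```python
def cost_per_used_unit(cost_per_provisioned_unit, utilization):
    """Effective cost of each unit of capacity actually used.

    utilization: fraction of provisioned capacity in use, 0 < u <= 1.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    return cost_per_provisioned_unit / utilization


# Utilization levels cited in the text; 1.0 is a normalized unit cost.
for u in (0.05, 0.15, 0.45):
    multiple = cost_per_used_unit(1.0, u)
    print(f"{u:.0%} utilized -> {multiple:.2f}x nominal cost per used unit")
```

At 5 percent utilization each used unit effectively costs 20 times its nominal price; even at the 45 percent industry average it costs a little more than twice as much.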

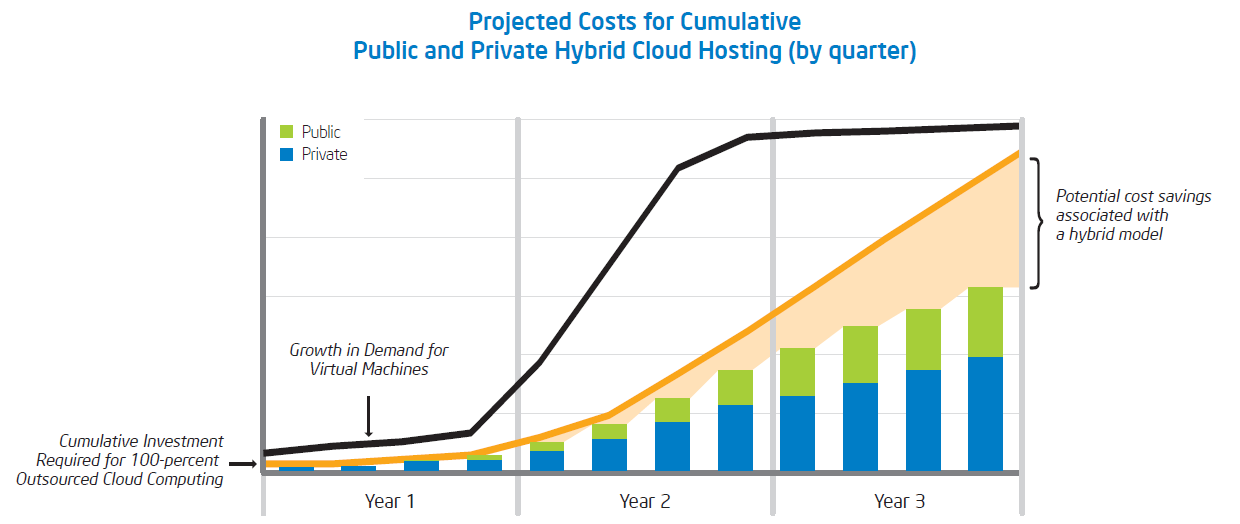

Most organizations choose a hybrid cloud approach that keeps some key applications and systems in-house. However, even a downsized data center will continue to incur such expenses as leased lines, physical and virtual servers, CPUs, RAM, and storage, whether SAN, NAS, or direct-attached. Without an accurate analysis of ongoing in-house IT costs, you may overestimate the savings you’ll realize from adopting cloud infrastructure.

Intel’s financial model for assessing the relative variable costs of public, private, and hybrid cloud setups found that hybrid clouds not only save businesses money but also let companies deliver new products faster and reallocate resources more quickly to meet changing demand. Source: Intel
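The overestimate described above comes from leaving the residual in-house costs of a hybrid setup out of the comparison. A minimal sketch, with all dollar figures hypothetical and the class name illustrative:

```python
from dataclasses import dataclass


@dataclass
class MigrationCostModel:
    current_onprem: float   # today's annual in-house IT cost
    projected_cloud: float  # projected annual cloud spend after migration
    residual_onprem: float  # annual cost of the downsized data center
                            # (leased lines, servers, CPUs, RAM, storage)

    def naive_savings(self):
        # Ignores everything that stays in-house: the common overestimate.
        return self.current_onprem - self.projected_cloud

    def realistic_savings(self):
        # Counts the hybrid setup's remaining in-house costs as well.
        return self.current_onprem - (self.projected_cloud + self.residual_onprem)


m = MigrationCostModel(current_onprem=1_000_000,
                       projected_cloud=600_000,
                       residual_onprem=250_000)
print(m.naive_savings())      # 400000
print(m.realistic_savings())  # 150000
```

In this made-up case, ignoring the residual data center would inflate the projected savings by more than 2.5 times.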

5. Confirm the accuracy of your cloud cost accounting. Making the best decisions about how your cloud budget is spent requires the highest-quality usage data you can get your hands on. Network World contributor Chris Churchey points out the importance of basing the profiles of your performance, capacity, and availability requirements on hard historical data. One year’s worth of records on your actual resource consumption captures sufficient fluctuations in demand, according to Churchey.

Comparing the costs of various cloud services is complicated in large part because each vendor uses a unique pricing structure. Among the options they may present are paying one fixed price, paying by the gigabyte, and paying for CPU and network bandwidth “as you go.” Prices also vary based on the use of multi-tenant or dedicated services, performance requirements, and security needs.
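Because each vendor prices differently, a practical way to compare quotes is to apply every pricing structure to the same usage profile. The sketch below models the three structures mentioned above; the vendor labels, rates, and usage numbers are all hypothetical, and a real comparison would also need the tenancy, performance, and security factors the paragraph lists.

```python
# Each pricing structure is a function from a usage profile to a monthly cost.
def fixed_price(monthly_fee):
    return lambda usage: monthly_fee


def per_gigabyte(rate_per_gb):
    return lambda usage: usage["storage_gb"] * rate_per_gb


def pay_as_you_go(rate_per_cpu_hour, rate_per_gb_transferred):
    return lambda usage: (usage["cpu_hours"] * rate_per_cpu_hour
                          + usage["egress_gb"] * rate_per_gb_transferred)


# Hypothetical quotes (USD); every rate is an illustrative assumption.
quotes = {
    "Vendor A (fixed)": fixed_price(5_000),
    "Vendor B (per GB)": per_gigabyte(0.10),
    "Vendor C (as you go)": pay_as_you_go(0.05, 0.09),
}

# Monthly usage profile, ideally derived from historical records.
usage = {"storage_gb": 40_000, "cpu_hours": 50_000, "egress_gb": 10_000}

costs = {name: quote(usage) for name, quote in quotes.items()}
cheapest = min(costs, key=costs.get)

for name, cost in sorted(costs.items(), key=lambda kv: kv[1]):
    print(f"{name}: ${cost:,.2f}")
print("Cheapest for this profile:", cheapest)
```

Rerunning the comparison with a different usage profile (for example, heavier CPU use) can change which quote wins, which is why the profile should come from hard historical data.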

Keep in mind that services may not mention latency in their quotes. If your high-storage, high-transaction apps require latency of 2 milliseconds or less, make sure the service’s agreement doesn’t allow latency as high as 5 milliseconds, for example. Such resource-intensive applications may require more expensive dedicated services rather than hosting in a multi-tenant environment.
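The latency check above can be written as a simple rule when reviewing quotes: compare the maximum latency the agreement permits against what your application requires, and treat a quote that omits latency as unverified rather than acceptable. A minimal sketch; the function name and the convention of passing `None` for an unstated figure are assumptions.

```python
def meets_latency_requirement(required_max_ms, sla_max_ms):
    """True only if the agreement's latency ceiling is within the requirement.

    required_max_ms: highest latency (ms) the application can tolerate.
    sla_max_ms: highest latency (ms) the agreement allows, or None when the
        quote does not state latency at all (treated as unverified).
    """
    if sla_max_ms is None:
        return False
    return sla_max_ms <= required_max_ms


# The example from the text: an app needing <= 2 ms vs. an SLA allowing 5 ms.
print(meets_latency_requirement(2, 5))
print(meets_latency_requirement(2, 1.5))
print(meets_latency_requirement(2, None))
```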

6. Run your numbers through the ‘cloudops’ calculator. The obvious shortcoming of basing your future cost estimates on historical data is the failure to account for changes. Anyone who hasn’t slept through the past decade knows the ability to anticipate changes has never been more important. To address this conundrum, InfoWorld’s David Linthicum has devised a “back-of-the-napkin” cloudops calculator that factors in future changes in technology costs and the cost of adding and deleting workloads on the public cloud.

Start with the number of workloads (NW); then rate their complexity (CW) on a scale from 1.01 to 2.0. Next, rate your security requirements (SR) from 100 to 500, then your monitoring requirements (MR) from 100 to 500, and finally apply your cloudops multiplier (CM), from 1,000 to 10,000, based on people costs, service costs, and other required resources.

Here are a typical calculation and a typical use case:

Using the cloudops calculator, you can forecast overall cloud costs based on workload number and complexity, security, monitoring, and overall scope. Source: InfoWorld

In the above example, the use case totals $9.8 million: $8.75 million for workload number/complexity using a median multiplier of 5,000; $612,500 for security using a multiplier of 350; and $437,500 for monitoring using a multiplier of 250. Because you’re starting with speculative data, the initial calculation will be a rough estimate that you can refine over time as more accurate cloud-usage data becomes available.
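The dollar figures above are consistent with a formula in which the workload count times complexity (NW × CW = 1,750 in this example) is multiplied separately by CM, SR, and MR, and the three products are summed: 1,750 × 5,000 + 1,750 × 350 + 1,750 × 250 = $9.8 million. The sketch below encodes that reading. It is inferred from the numbers in this article rather than copied from Linthicum’s published calculator, and the split of NW = 1,000 and CW = 1.75 is an assumption (any pair whose product is 1,750 gives the same result).

```python
def cloudops_cost(nw, cw, sr, mr, cm):
    """Cloudops estimate as inferred from the worked example.

    nw: number of workloads
    cw: workload complexity, 1.01-2.0
    sr: security requirements, 100-500
    mr: monitoring requirements, 100-500
    cm: cloudops multiplier, 1,000-10,000
    """
    if nw <= 0:
        raise ValueError("nw must be positive")
    if not 1.01 <= cw <= 2.0:
        raise ValueError("cw must be between 1.01 and 2.0")
    if not (100 <= sr <= 500 and 100 <= mr <= 500):
        raise ValueError("sr and mr must be between 100 and 500")
    if not 1_000 <= cm <= 10_000:
        raise ValueError("cm must be between 1,000 and 10,000")

    base = nw * cw  # workload number weighted by complexity
    parts = {
        "workloads": base * cm,
        "security": base * sr,
        "monitoring": base * mr,
    }
    parts["total"] = sum(parts.values())
    return parts


# Assumed split: 1,000 workloads at complexity 1.75 (product = 1,750).
est = cloudops_cost(nw=1_000, cw=1.75, sr=350, mr=250, cm=5_000)
for item, dollars in est.items():
    print(f"{item:>10}: ${dollars:,.0f}")
```

Keeping the inputs as named parameters makes it easy to rerun the estimate as better usage data replaces the speculative starting values.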

The greatest benefit of the cloudops calculator is that it opens a window into the actual costs of ongoing cloud operations rather than merely the startup costs. The “reality check” offered by the calculator goes a long way toward ensuring you won’t make the critical mistake of underestimating the cost of your cloud operations.

Get a jump on cloud optimization via Morpheus’s end-to-end lifecycle management, which lets you initiate requests in ServiceNow and automate your shutdown, power scheduling, and service expiration configurations. Morpheus Intelligent Analytics make it simple to track services from creation to deletion by setting approval and expiration policies, and pausing services in off hours.

Sign up for a Morpheus Demo to learn how companies are saving money by keeping their sprawling multi-cloud infrastructures under control.